AdComfortable1514 4h ago • 100%

My solution has been to right-click and select "Inspect element" to open the browser's HTML window.

Then zoom out the generator as far as it goes, and scroll down so the entire image gallery (or at least part of it) is rendered within the browser.

Then Ctrl+C to copy the HTML, paste it into Notepad++, and use regular expressions to extract the image prompts (and image source links) from the HTML code.

Not exactly a good fix , but it gets the job done at least.
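
For anyone who would rather script the regex step than do it in Notepad++, here is a rough Python sketch. The markup is an assumption: it supposes each gallery image is an `<img>` tag with the link in `src` and the prompt in `title`. The real generator HTML may look different, so the patterns and the `extract_prompts` helper (a made-up name) will likely need adjusting.

```python
import html
import re

# Hypothetical markup: assumes each gallery image is an <img> tag that carries
# the source link in `src` and the prompt in `title`. Adjust to the real HTML.
IMG_TAG = re.compile(r'<img\b[^>]*>', re.IGNORECASE)
ATTR = re.compile(r'([\w-]+)\s*=\s*"([^"]*)"')

def extract_prompts(page_html: str) -> list[tuple[str, str]]:
    """Return (prompt, image_source) pairs found in the copied HTML."""
    results = []
    for tag in IMG_TAG.findall(page_html):
        # Collect the tag's attributes and decode entities such as &amp;
        attrs = {name.lower(): html.unescape(value) for name, value in ATTR.findall(tag)}
        if "src" in attrs and "title" in attrs:
            results.append((attrs["title"], attrs["src"]))
    return results

sample = '<div><img src="https://example.com/a.png" title="a cat &amp; a dog"></div>'
print(extract_prompts(sample))
```

Regex on HTML is fragile, but for a one-off copy-paste job like this it is usually enough.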

AdComfortable1514 7d ago • 100%

Big ask.

Personally , I'd be overjoyed to just have embeddings available on the site.

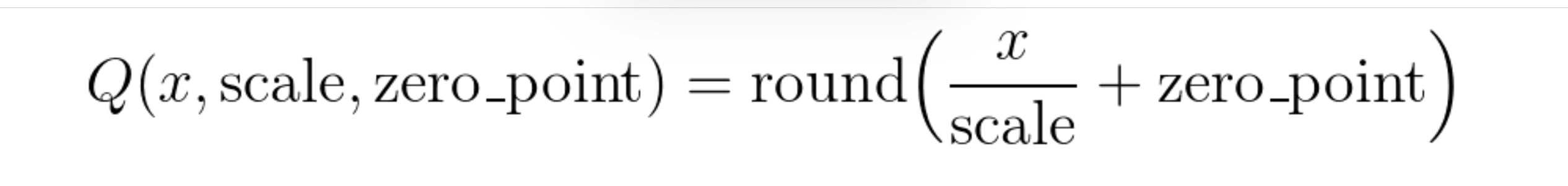

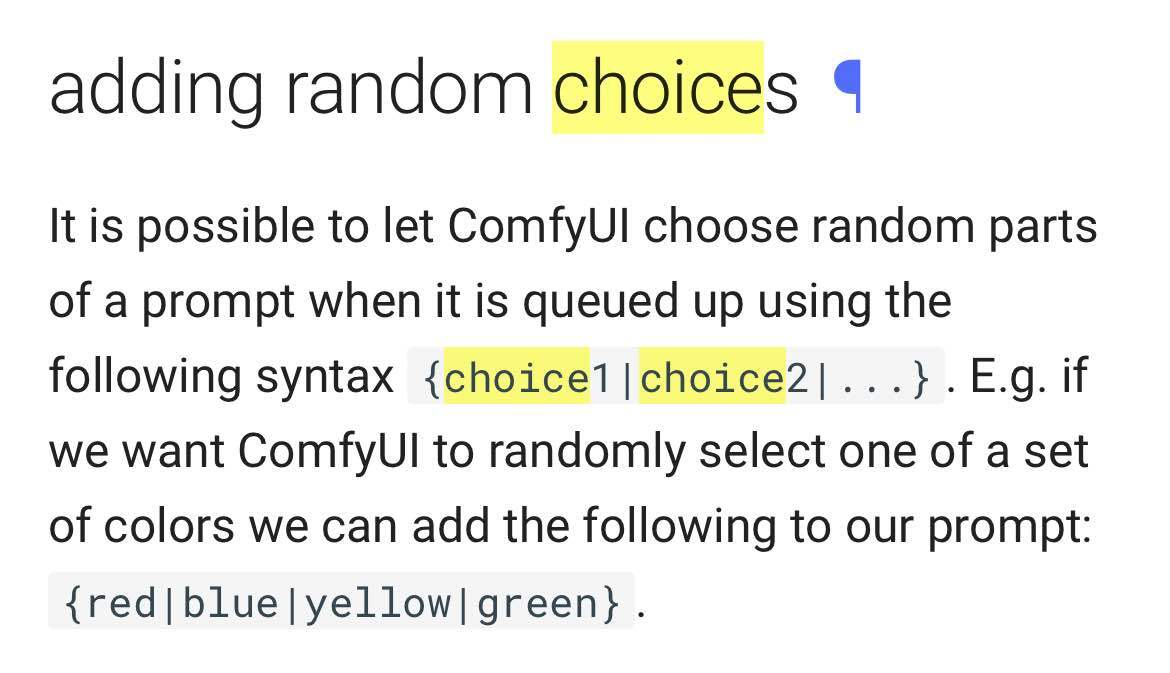

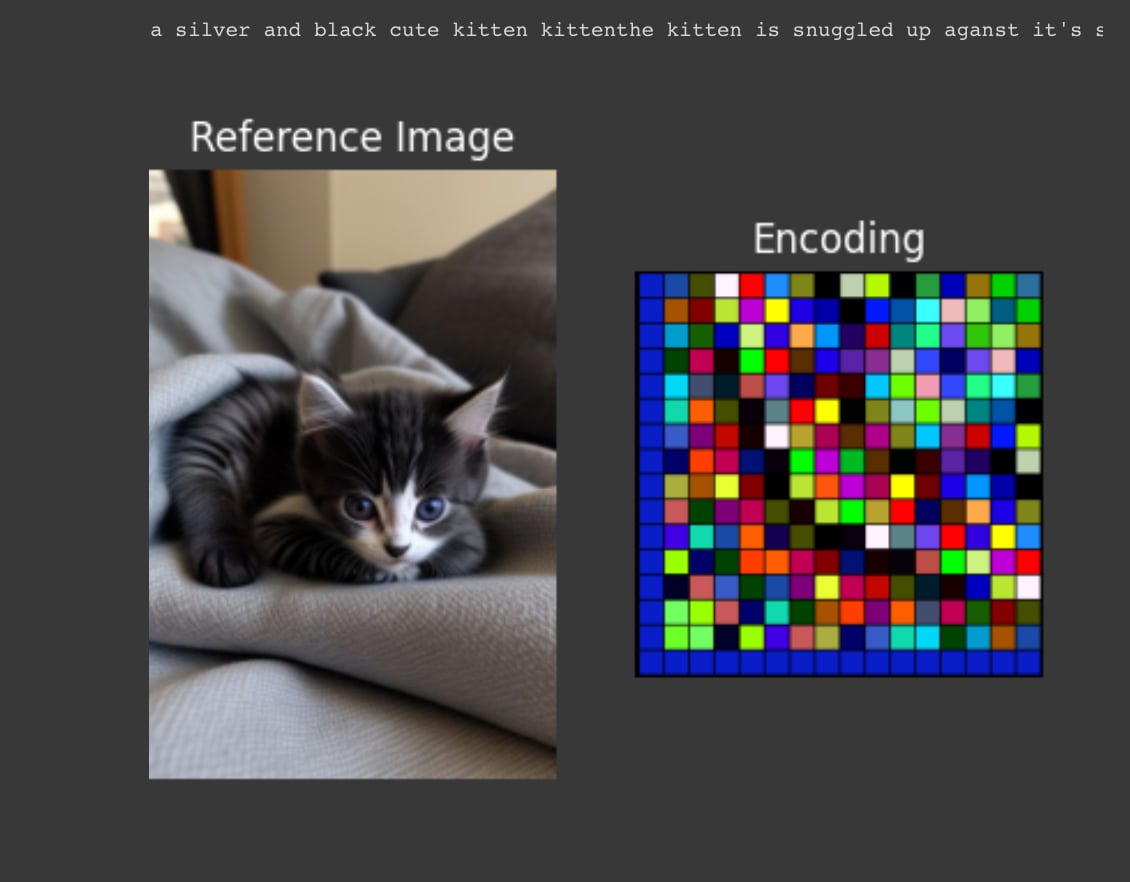

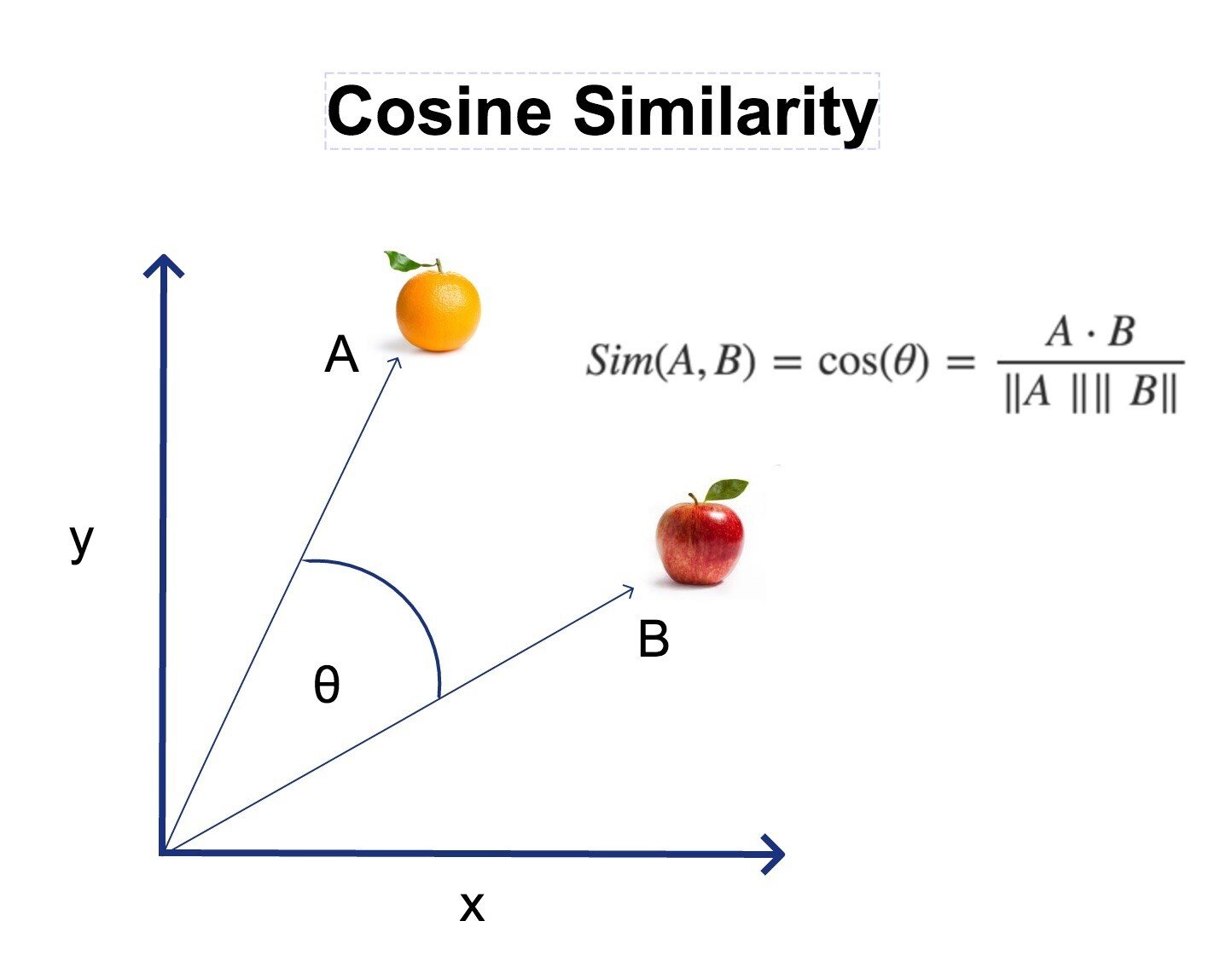

Image shows a list of prompt items before/after running 'remove duplicates' on a subset of the AdamCodd Hugging Face repo of Civitai prompts: https://huggingface.co/datasets/AdamCodd/Civitai-2m-prompts/tree/main

The tool I'm building "searches" existing prompts similar to text or images. Like the common CLIP interrogator, but better. Link to notebook here: https://huggingface.co/datasets/codeShare/fusion-t2i-generator-data/blob/main/Google%20Colab%20Jupyter%20Notebooks/fusion_t2i_CLIP_interrogator.ipynb

For the pre-encoded reference, I recommend experimenting with setting the START_AT parameter to values of 10000-100000 for added variety.

//---//

Removing duplicates from the Civitai prompts results in a 90% reduction of items! Pretty funny IMO. It shows the human tendency to stick to the same type of words when prompting. I'm no exception. I prompt the same way all the time, which is why I'm building this tool, so that I don't need to think about it. If you wish to search this set, you can use the notebook above. Unlike the typical pharmapsychotic CLIP interrogator, I pre-encode the text corpus ahead of time.

//---//

Additionally, I'm using quantization on the text corpus to store the encodings as unsigned integers (torch.uint8) instead of float32, using the scheme described below. For the CLIP encodings, I use scale 0.0043. A typical zero_point value for a given encoding can be 0, 30, 120 or 250-ish. The TLDR is that you divide the float32 value by 0.0043, round it to the closest integer, and then increase the zero_point value until all values within the encoding are 0 or above. This allows us to accurately store the values as unsigned integers, torch.uint8. This conversion reduces the file size to roughly 1/4th of its original size, since each value takes 1 byte instead of 4. When it is time to calculate stuff, you do the same process in reverse.

For more info related to quantization, see the PyTorch docs: https://pytorch.org/docs/stable/quantization.html

//---//

I also have a 1.6 million item set of fanfiction tags loaded from https://archiveofourown.org/ It's mostly character names. They are listed as fanfic1 and fanfic2 respectively.

//---//

ComfyUI users should know that random choice {item1|item2|...} exists as a built-in feature.

//---//

Upcoming plans are to include a visual representation of the text_encodings as colored cells within a 16x16 grid. A color is an RGB value (3 integer values) within a given range, and 3 x 16 x 16 = 768, which happens to be the dimension of the CLIP encoding. EDIT: Added it now.

//---//

That's all for this update.
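
To make the quantization TLDR from this post concrete, here is a small sketch in plain Python. It follows the steps as described (divide by the scale, round, shift by a zero_point so nothing is negative, then reverse it all later). The function names are made up for illustration, plain lists stand in for torch.uint8 tensors, and picking the smallest zero_point that works is my assumption, not necessarily what the notebook does.

```python
SCALE = 0.0043  # scale quoted in the post for CLIP encodings

def quantize(values, scale=SCALE):
    """Map floats to integers in 0..255 and return them with the zero_point used."""
    steps = [round(v / scale) for v in values]
    zero_point = max(0, -min(steps))  # smallest shift that makes every step >= 0
    quantized = [s + zero_point for s in steps]
    if max(quantized) > 255:
        raise ValueError("value range too wide for uint8 at this scale")
    return quantized, zero_point

def dequantize(quantized, zero_point, scale=SCALE):
    """Reverse the process: subtract the zero_point, multiply by the scale."""
    return [(q - zero_point) * scale for q in quantized]

encoding = [-0.52, 0.0, 0.13, 0.48]  # toy stand-in for a 768-value encoding
q, zp = quantize(encoding)
restored = dequantize(q, zp)
print(q, zp)     # [0, 121, 151, 233] 121
print(restored)  # each value is within scale/2 of the original
```

The round trip is lossy: every restored value can be off by up to half the scale (about 0.002 here), which is the price for the smaller files.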

AdComfortable1514 1w ago • 100%

It is good that you ask :)!

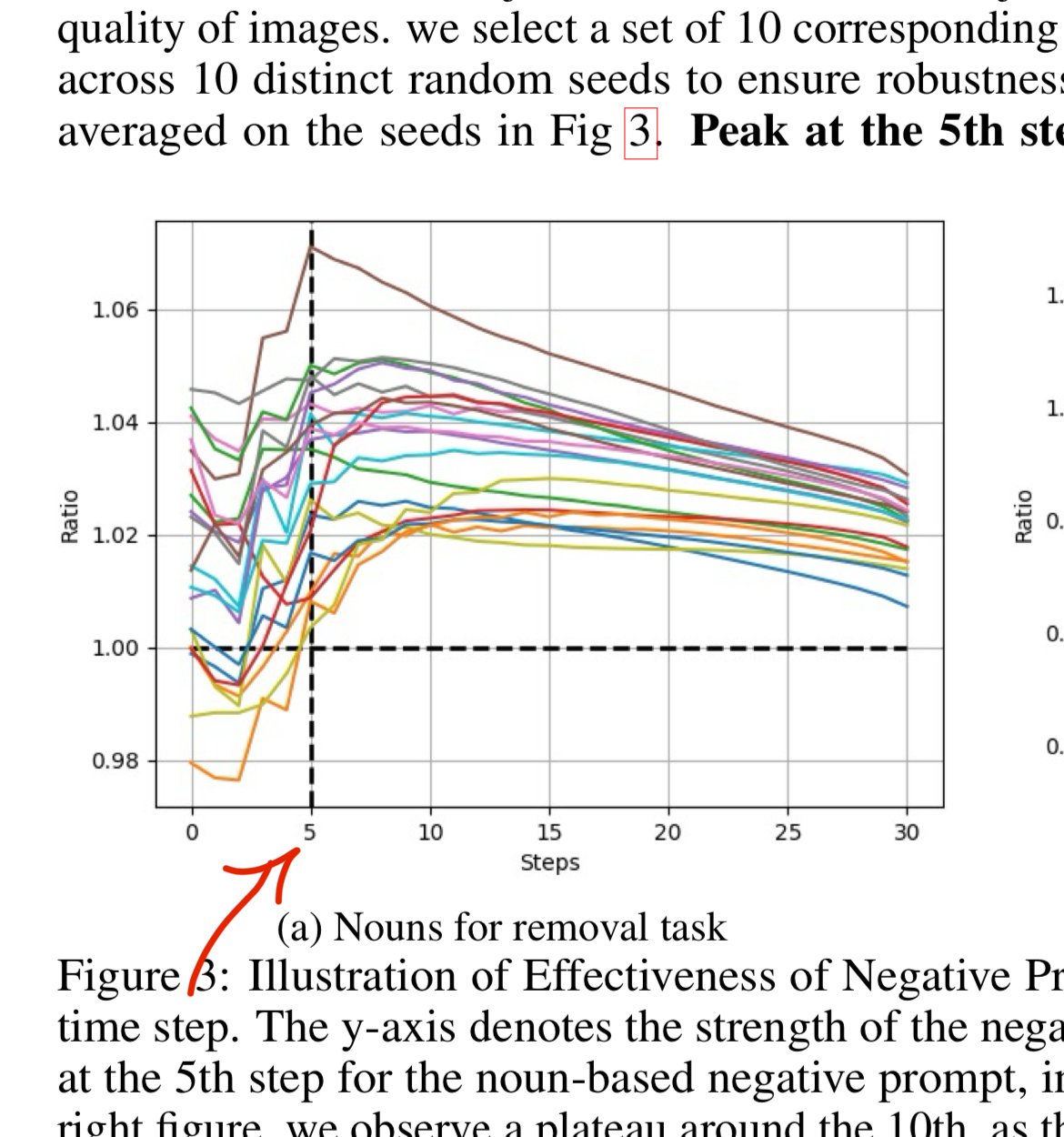

Read this: https://arxiv.org/abs/2406.02965

The tldr:

Negatives should be 'things that appear in the image' .

If you prompt a picture of a cat , then 'cat' or 'pet' can be useful items to place in the negative prompt.

Adjectives are eliminated best with a 16.7% delay, by writing "\[ : neg1 neg2 neg3 :0.167 \]" in the negative prompt instead of "neg1 neg2 neg3".

Nouns are eliminated best with a 30% delay, by writing "\[ : neg1 neg2 neg3 :0.3 \]" in the negative prompt instead of "neg1 neg2 neg3".
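
If you build negative prompts in a script, the delayed form above is easy to generate. A tiny sketch follows. `delayed_negative` is a made-up helper name, and the 0.167 / 0.3 values are just the ones quoted in this comment.

```python
def delayed_negative(terms, delay):
    """Wrap negative terms in the '[ : terms :delay ]' prompt-editing syntax."""
    if not 0.0 <= delay <= 1.0:
        raise ValueError("delay is a fraction of the sampling steps, 0..1")
    return f"[ : {' '.join(terms)} :{delay:g} ]"

print(delayed_negative(["blurry", "grainy"], 0.167))  # [ : blurry grainy :0.167 ]
print(delayed_negative(["cat", "pet"], 0.3))          # [ : cat pet :0.3 ]
```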

AdComfortable1514 2w ago • 100%

I appreciate that you took the time to write a sincere question.

Kinda rude for people to downvote you.

AdComfortable1514 1mo ago • 100%

Simple and cool.

Florence 2 image captioning sounds interesting to use.

Do people know of any other image-to-text models (apart from CLIP) ?

Link : https://huggingface.co/spaces/Qwen/Qwen2.5 Background (posted today!) : https://qwenlm.github.io/blog/qwen2.5-llm/ //----// These were released today. I have 0% knowledge of what this thing can do, other than that it seems to be a really good LLM.

AdComfortable1514 1mo ago • 100%

Wow , yeah I found a demo here: https://huggingface.co/spaces/Qwen/Qwen2.5

A whole host of LLM models seem to have been released. Thanks for the tip!

I'll see if I can turn them into something useful 👍

AdComfortable1514 1mo ago • 100%

That's good to know. I'll try them out. Thanks.

Link : https://huggingface.co/datasets/codeShare/text-to-image-prompts/tree/main/Google%20Colab%20Notebooks Still have a ton of data I need to process and upload.

AdComfortable1514 1mo ago • 100%

Hmm. I mean the FLUX model looks good, so maybe there is some magic with the T5?

I have no clue, so any insights are welcome.

T5 Huggingface: https://huggingface.co/docs/transformers/model_doc/t5

T5 paper : https://arxiv.org/pdf/1910.10683

Any suggestions on what LLM I ought to use instead of T5?

AdComfortable1514 1mo ago • 100%

Good find! Fixed. It was well appreciated.

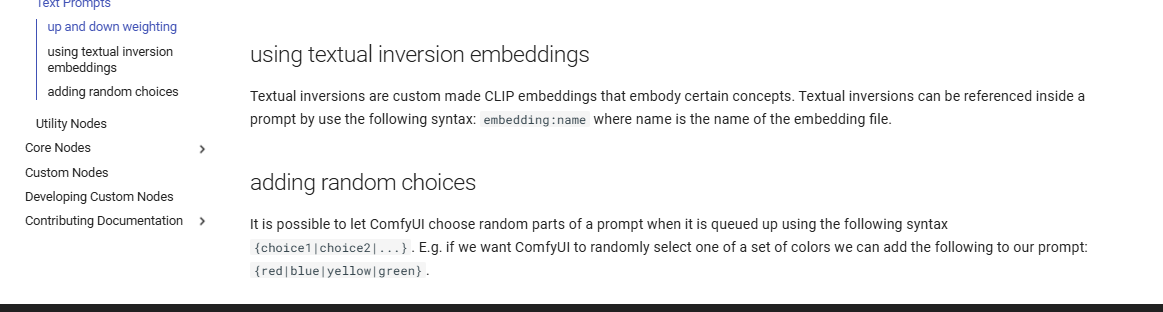

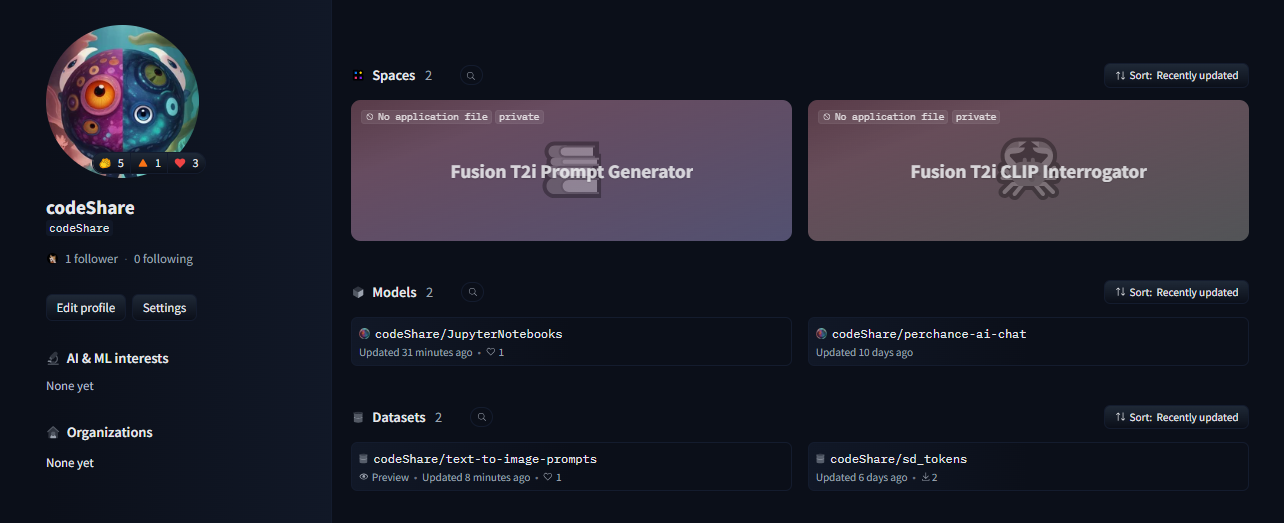

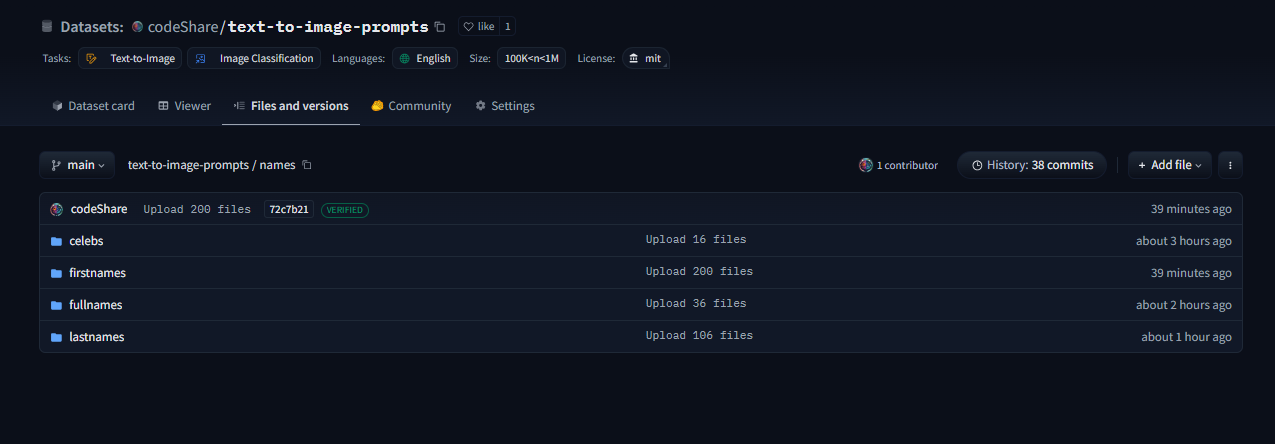

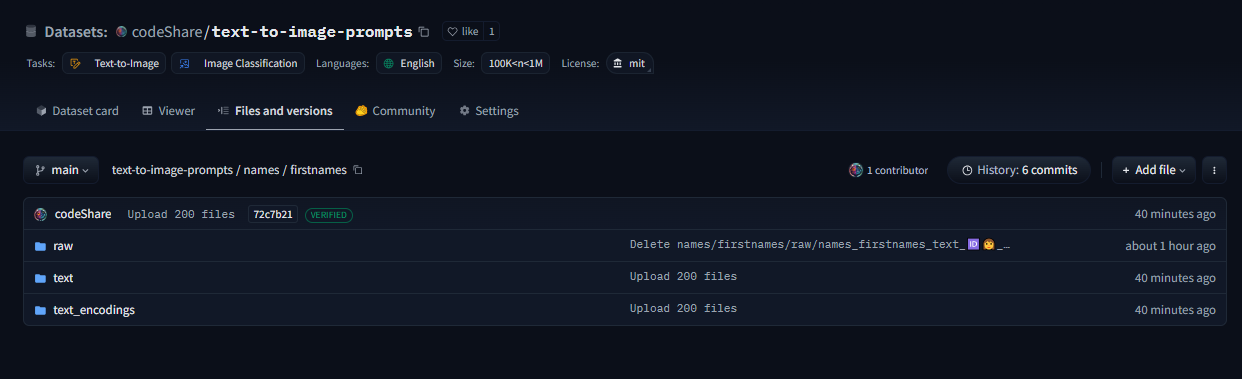

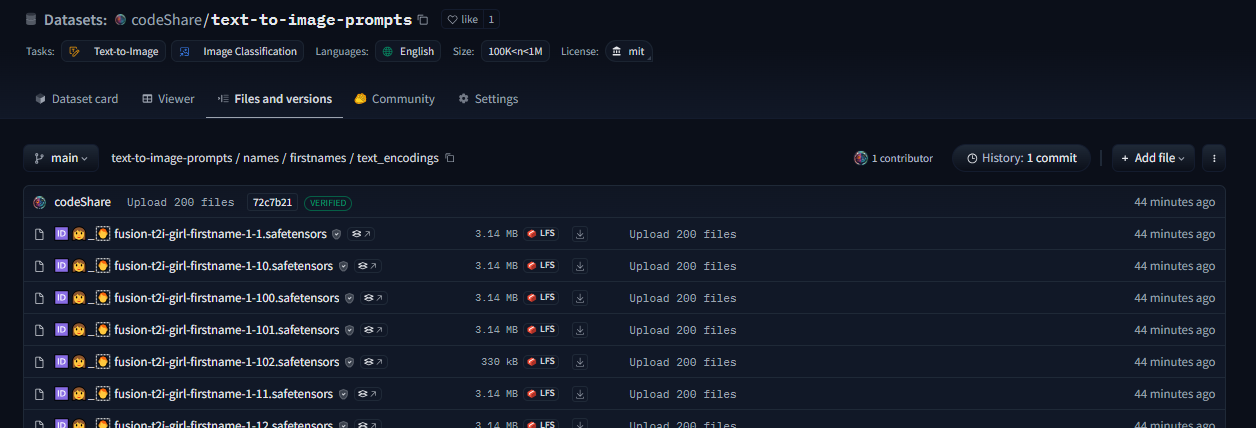

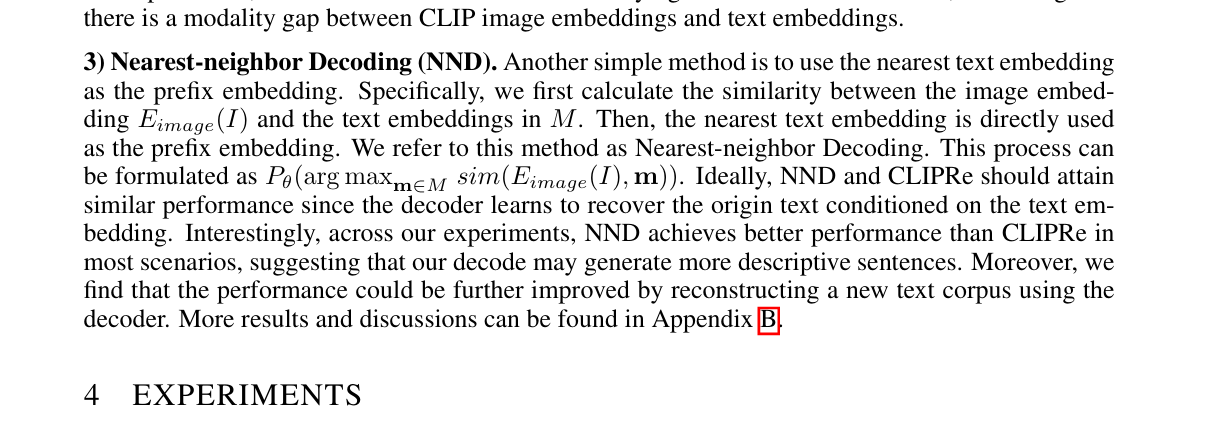

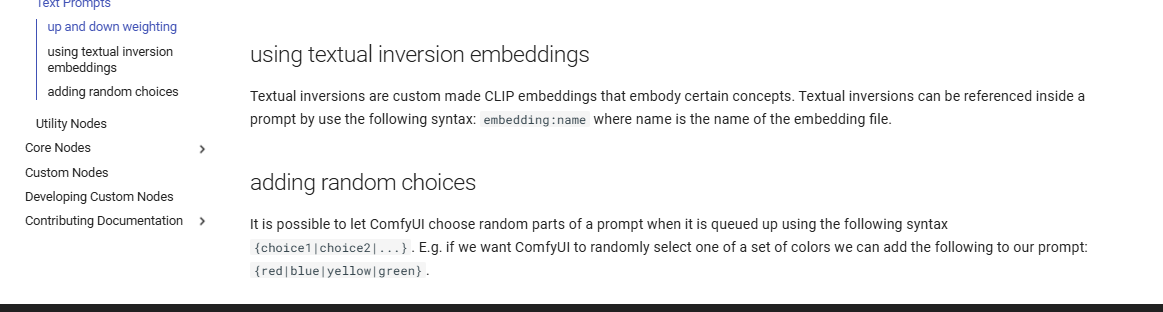

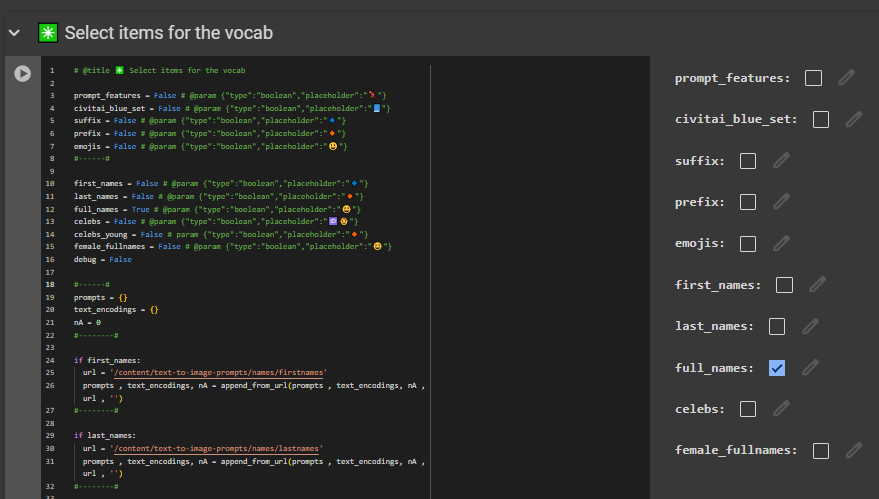

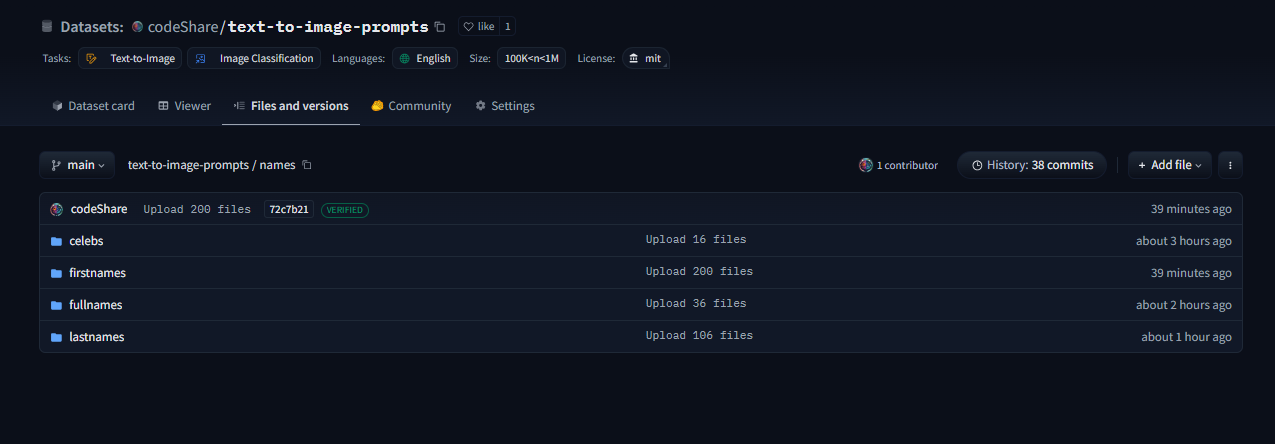

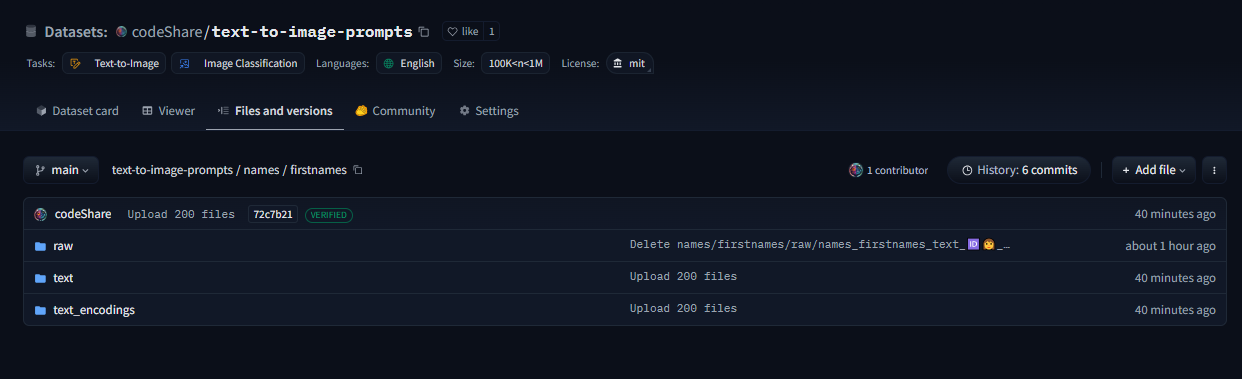

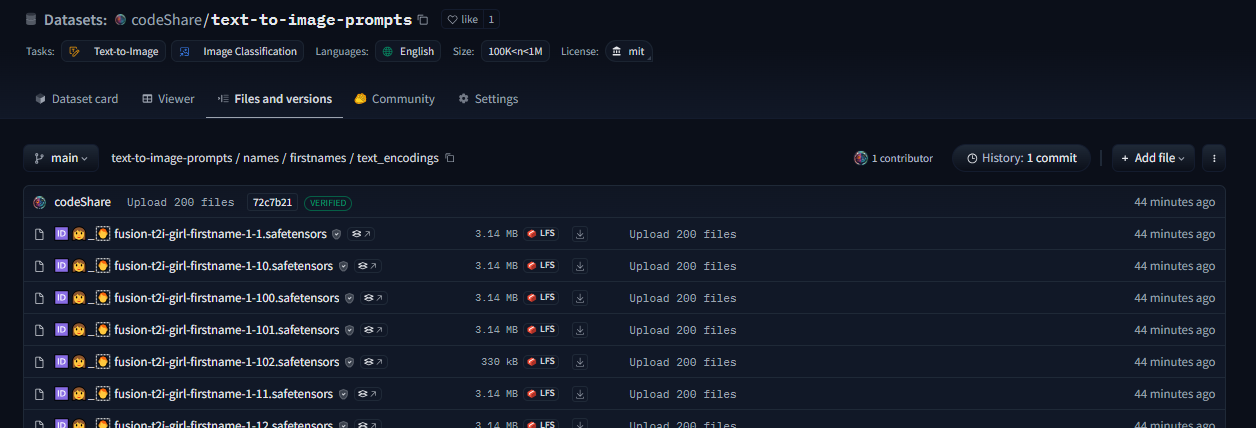

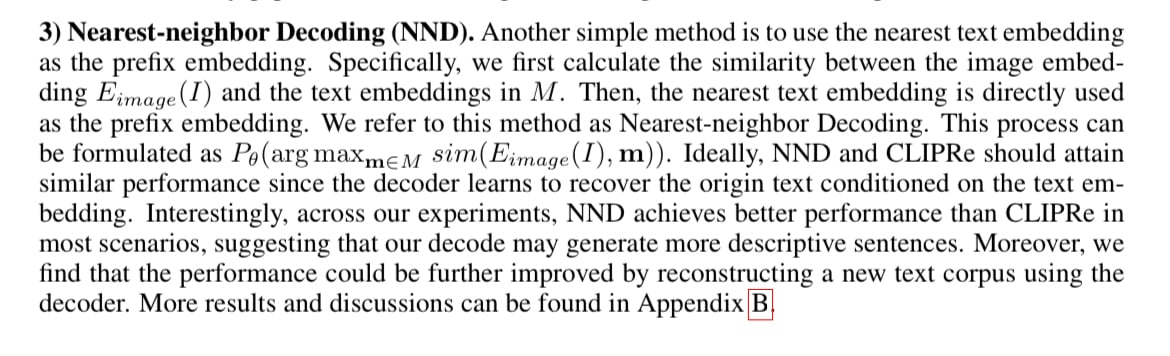

This post is a developer diary , kind of. I'm making an improved CLIP interrogator using nearest-neighbor decoding: https://huggingface.co/codeShare/JupyterNotebooks/blob/main/sd_token_similarity_calculator.ipynb , unlike the Pharmapsychotic model aka the "vanilla" CLIP interrogator : https://huggingface.co/spaces/pharmapsychotic/CLIP-Interrogator/discussions It doesn't require GPU to run, and is super quick. The reason for this is that the text_encodings are calculated ahead of time. I have plans on making this a Huggingface module. //----// This post gonna be a bit haphazard, but that's the way things are before I get the huggingface gradio module up and running. Then it can be a fancy "feature" post , but no clue when I will be able to code that. So better to give an update on the ad-hoc solution I have now. The NND method I'm using is described here , in this paper which presents various ways to improve CLIP Interrogators: https://arxiv.org/pdf/2303.03032  Easier to just use the notebook then follow this gibberish. We pre-encode a bunch of prompt items , then select the most similiar one using dot product. Thats the TLDR. Right now the resources available are the ones you see in the image. I'll try to showcase it at some point. But really , I'm mostly building this tool because it is very convenient for myself + a fun challenge to use CLIP. It's more complicated than the regular CLIP interrogator , but we get a whole bunch of items to select from , and can select exactly "how similiar" we want it to be to the target image/text encoding. The \{itemA|itemB|itemC\} format is used as this will select an item at random when used on the perchance text-to-image servers, in in which I have a generator where I'm using the full dataset , https://perchance.org/fusion-ai-image-generator NOTE: I've realized new users get errors when loading the fusion gen for the first time. 
It takes minutes to load a fraction of the sets from perchance servers before this generator is "up and running" so-to speak. I plan to migrate the database to a Huggingface repo to solve this : https://huggingface.co/datasets/codeShare/text-to-image-prompts The \{itemA|itemB|itemC\} format is also a build-in random selection feature on ComfyUI :  Source : https://blenderneko.github.io/ComfyUI-docs/Interface/Textprompts/#up-and-down-weighting Links/Resources posted here might be useful to someone in the meantime.  You can find tons of strange modules on the Huggingface page : https://huggingface.co/spaces text_encoding_converter (also in the NND notebook) : https://huggingface.co/codeShare/JupyterNotebooks/blob/main/indexed_text_encoding_converter.ipynb I'm using this to batch process JSON files into json + text_encoding paired files. Really useful (for me at least) when building the interrogator. Runs on the either Colab GPU or on Kaggle for added speed: https://www.kaggle.com/ Here is the dataset folder https://huggingface.co/datasets/codeShare/text-to-image-prompts:  Inside these folders you can see the auto-generated safetensor + json pairings in the "text" and "text_encodings" folders. The JSON file(s) of prompt items from which these were processed are in the "raw" folder.  The text_encodings are stored as safetensors. These all represent 100K female first names , with 1K items in each file. By splitting the files this way , it uses way less RAM / VRAM as lists of 1K can be processed one at a time.  I can process roughly 50K text encodings in about the time it takes to write this post (currently processing a set of 100K female firstnames into text encodings for the NND CLIP interrogator. 
) EDIT: Here is the uploaded output: https://huggingface.co/datasets/codeShare/text-to-image-prompts/tree/main/names/firstnames I've updated the notebook to include a similarity search for ~100K female firstnames, 100K lastnames and a randomized 36K mix of female firstnames + lastnames. It's a JSON + safetensor pairing with 1K items in each. Inside the JSON is the name of the .safetensor file it corresponds to. This system is super quick :)! I have plans on making the NND image interrogator a public resource on Huggingface later down the line, using these sets. Will likely use the repo for perchance imports as well: https://huggingface.co/datasets/codeShare/text-to-image-prompts **Sources for firstnames: https://huggingface.co/datasets/jbrazzy/baby_names** List of the most popular names given to people in the US by year. **Sources for lastnames: https://github.com/Debdut/names.io** An international list of pretty much all firstnames + lastnames in existence. Kinda borked as it is biased towards non-western names. Haven't been able to filter this by nationality, unfortunately. //----// The TLDR: You can run a prompt, or an image, to get the encoding from CLIP. Then sample the above sets (of >400K items, at the moment) to get prompt items similar to that thing.
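
The "pre-encode a bunch of prompt items, then pick the most similar one by dot product" idea from this post can be sketched in a few lines. This is a toy version under loud assumptions: 3-dimensional lists stand in for the 768-dimensional CLIP encodings, the corpus is invented, and `most_similar` is a hypothetical helper rather than the notebook's actual code.

```python
import math

def normalize(vec):
    """Scale a vector to unit length so the dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def most_similar(target, corpus, top_k=3):
    """Rank pre-encoded items by dot product with the normalized target."""
    t = normalize(target)
    scored = [
        (sum(a * b for a, b in zip(t, normalize(vec))), text)
        for text, vec in corpus.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [(text, round(score, 4)) for score, text in scored[:top_k]]

# Toy 3-dimensional stand-ins for the 768-dimensional CLIP encodings.
corpus = {
    "red dress": [0.9, 0.1, 0.0],
    "blue sky": [0.0, 0.2, 0.9],
    "crimson gown": [0.8, 0.3, 0.1],
}
print(most_similar([1.0, 0.2, 0.0], corpus, top_k=2))
```

In a real setup the corpus vectors would be normalized once when they are pre-encoded, so only the target needs normalizing at search time. That is a big part of why no GPU is needed.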

Setting up some proper infrastructure to move the perchance sets onto Huggingface with text_encodings. This post is a developer diary , kind of. Its gonna be a bit haphazard, but that's the way things are before I get the huggingface gradio module up and running. The NND method is described here , in this paper which presents various ways to improve CLIP Interrogators: https://arxiv.org/pdf/2303.03032  Easier to just use the notebook then follow this gibberish. We pre-encode a bunch of prompt items , then select the most similiar one using dot product. Thats the TLDR. I'll try to showcase it at some point. But really , I'm mostly building this tool because it is very convenient for myself + a fun challenge to use CLIP. It's more complicated than the regular CLIP interrogator , but we get a whole bunch of items to select from , and can select exactly "how similiar" we want it to be to the target image/text encoding. The \{itemA|itemB|itemC\} format is used as this will select an item at random when used on the perchance text-to-image servers: https://perchance.org/fusion-ai-image-generator It is also a build-in random selection feature on ComfyUI , coincidentally :  Source : https://blenderneko.github.io/ComfyUI-docs/Interface/Textprompts/#up-and-down-weighting Links/Resources posted here might be useful to someone in the meantime.  You can find tons of strange modules on the Huggingface page : https://huggingface.co/spaces  For now you will have to make do with the NND CLIP Interrogator notebook : https://huggingface.co/codeShare/JupyterNotebooks/blob/main/sd_token_similarity_calculator.ipynb  text_encoding_converter (also in the NND notebook) : https://huggingface.co/codeShare/JupyterNotebooks/blob/main/indexed_text_encoding_converter.ipynb I'm using this to batch process JSON files into json + text_encoding paired files. Really useful (for me at least) when building the interrogator. 
Runs on the either Colab GPU or on Kaggle for added speed: https://www.kaggle.com/ Here is the dataset folder https://huggingface.co/datasets/codeShare/text-to-image-prompts:  Inside these folders you can see the auto-generated safetensor + json pairings in the "text" and "text_encodings" folders. The JSON file(s) of prompt items from which these were processed are in the "raw" folder.  The text_encodings are stored as safetensors. These all represent 100K female first names , with 1K items in each file. By splitting the files this way , it uses way less RAM / VRAM as lists of 1K can be processed one at a time.  //-----// Had some issues earlier with IDs not matching their embeddings but that should be resolved with this new established method I'm using. The hardest part is always getting the infrastructure in place. I can process roughly 50K text encodings in about the time it takes to write this post (currently processing a set of 100K female firstnames into text encodings for the NND CLIP interrogator. ) EDIT : Here is the output uploaded https://huggingface.co/datasets/codeShare/text-to-image-prompts/tree/main/names/firstnames I've updated the notebook to include a similarity search for ~100K female firstnames , 100K lastnames and a randomized 36K mix of female firstnames + lastnames **Sources for firstnames : https://huggingface.co/datasets/jbrazzy/baby_names** List of most popular names given to people in the US by year **Sources for lastnames : https://github.com/Debdut/names.io** An international list of all firstnames + lastnames in existance, pretty much . Kinda borked as it is biased towards non-western names. Haven't been able to filter this by nationality unfortunately. //------// Its a JSON + safetensor pairing with 1K items in each. Inside the JSON is the name of the .safetensor files which it corresponds to. This system is super quick :)! 
I plan on running a list of celebrities against the randomized list for firstnames + lastnames in order to create a list of fake "celebrities" that only exist in Stable Diffusion latent space. An "ethical" celebrity list, if you can call it that which have similiar text-encodings to real people but are not actually real names. I have plans on making the NND image interrogator a public resource on Huggingface later down the line, using these sets. Will likely use the repo for perchance imports as well: https://huggingface.co/datasets/codeShare/text-to-image-prompts
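
For anyone wanting to use the \{itemA|itemB|itemC\} format outside of perchance or ComfyUI, the random selection is easy to imitate. The sketch below only mimics the behavior described in this post. It is not how either tool implements it, it does not handle nested braces, and `expand_choices` is a made-up name.

```python
import random
import re

# Matches one {a|b|c} group without nested braces.
CHOICE = re.compile(r"\{([^{}]*)\}")

def expand_choices(prompt, rng=None):
    """Replace every {a|b|c} group with one randomly chosen item."""
    rng = rng or random.Random()
    return CHOICE.sub(lambda m: rng.choice(m.group(1).split("|")), prompt)

print(expand_choices("photo of a {red|blue|green} {car|bike}", random.Random(0)))
```

Passing a seeded `random.Random` makes the pick repeatable, which helps when you want to reproduce a prompt later.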

AdComfortable1514 1mo ago • 100%

Fair enough

AdComfortable1514 1mo ago • 100%

I get it. I hope you don't interpret this as arguing against results etc.

What I want to say is ,

If implemented correctly, the same seed does give the same output for a given prompt.

If there is variation , then something in the pipeline must be approximating things.

This may be good (for performance) , or it may be bad.

You are 100% correct in highlighting this issue to the dev.

Though it's not a legal document, or a science paper.

Just a guide to explain seeds to newbies.

Omitting non-essential information , for the sake of making the concept clearer , can be good too.
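
A toy illustration of the seed point, for the newbies the guide is aimed at. `fake_sampler` is a made-up stand-in for an image pipeline where every bit of randomness comes from the seed. It is not Stable Diffusion, just the same principle with a plain random number generator.

```python
import random

def fake_sampler(prompt, seed, steps=4):
    """Stand-in for an image pipeline: all randomness comes from the seed."""
    rng = random.Random(f"{seed}:{prompt}")
    return [round(rng.random(), 6) for _ in range(steps)]

a = fake_sampler("a cat", seed=42)
b = fake_sampler("a cat", seed=42)
c = fake_sampler("a cat", seed=43)
print(a == b)  # same seed and prompt give identical "output"
print(a == c)  # a different seed gives different "output"
```

If a real pipeline gives different results for the same seed and prompt, the variation comes from somewhere outside this loop, for example approximations made for performance.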

AdComfortable1514 1mo ago • 100%

Perchance dev is correct here Allo ;

the same seed will generate the exact same picture.

If you see variety , it will be due to factors outside the SD model. That stuff happens.

But it's good that you fact check stuff.

AdComfortable1514 1mo ago • 100%

Do you know where I can find documentation on the perchance API?

Specifically createPerchanceTree ?

I need to know which functions there are , and what inputs/outputs they take.

prompt: "[#FTSA# : red carpet in background by architecture Tuymans and pani jaan antibody hopped bine users eternity archives :0.1]" https://perchance.org/fusion-ai-image-generator

AdComfortable1514 1mo ago • 100%

Thanks! I appreciate the support. Helps a lot to know where to start looking ( ; v ;)b!

Error disappears by updating any HTML element on the fusion gen page.

Source: https://perchance.org/fusion-ai-image-generator

Dynamic imports plugin: https://perchance.org/dynamic-import-plugin

I let name = localStorage.name, if it exists, when running dynamicImports(name). I didn't have this error when I implemented the localStorage thingy. So I suspected this to be connected to some newly added feature for dynamic imports. Ideas on solving this?

Code for when I select names for dynamic import upon start (the error only occurs upon opening/reloading the page):

```
_generator
  gen_danbooru
    fusion-t2i-danbooru-1
    fusion-t2i-danbooru-2
    fusion-t2i-danbooru-3
  gen_lyrics
    fusion-t2i-lyrics-1
    fusion-t2i-lyrics-2
  ...

_genKeys
  gen_danbooru
  gen_lyrics
  ...

// Initialize
getStartingValue(type) =>
  _genKeys.selectAll.forEach(function(_key) {
    document[_key] = 'fusion-t2i-empty';
    if (localStorage.getItem(_key) && localStorage.getItem(_key) != '' && localStorage.getItem(_key) != 'fusion-t2i-empty') {
      document[_key] = localStorage.getItem(_key);
    } else {
      document[_key] = [_generator[_key].selectOne];
      localStorage.setItem(_key, document[_key]);
    };
  });
  ...
  dynamicImport(document.gen_danbooru, 'preload');
  ...
  if (type == "danbooru"): return document.gen_danbooru;
}; // End of getStartingValue(type)
...

_folders
  danbooru = dynamicImport(document.gen_danbooru || getStartingValue("danbooru"))
```

Link: https://huggingface.co/datasets/codeShare/text-to-image-prompts/blob/main/README.md Will update with JSON + text_encoding pairs as I process the sub_generators

AdComfortable1514 1mo ago • 100%

Cool

I plan on making my prompt library more accessible. I'm adding this feature because I plan to build a Huggingface JSON repo of the contents of my sub-generators , which I plan to use for my image interrogator: https://huggingface.co/codeShare/JupyterNotebooks/blob/main/sd_token_similarity_calculator.ipynb Example: [https://perchance.org/fusion-t2i-prompt-features-5](https://perchance.org/fusion-t2i-prompt-features-5) List of currently updated generators can be found here: https://lemmy.world/post/19398527

This is an open-ended question. I'm not looking for a specific answer, just what people know about this topic. I've asked this question on the Huggingface discord as well. But hey, asking on lemmy is always good, right? No need to answer here. This is a repost, essentially. This might serve as an "update" of sorts from the previous post: https://lemmy.world/post/19509682

//---//

Question: The FLUX model uses a combo of CLIP+T5 to create a text_encoding. CLIP is capable of doing both image_encoding and text_encoding. The T5 model seems to be strictly text-to-text. So I can't use the T5 to create image_encodings. Right? https://huggingface.co/docs/transformers/model_doc/t5

But nonetheless, the T5 encoder is used in text-to-image generation. So surely, there must be good uses for the T5 in creating a better CLIP interrogator? Ideas/examples on how to do this? I have 0% knowledge of the T5, so feel free to just send me a link someplace if you don't want to type out an essay.

//----//

For context: I'm making my own version of a CLIP interrogator : https://colab.research.google.com/#fileId=https%3A//huggingface.co/codeShare/JupyterNotebooks/blob/main/sd_token_similarity_calculator.ipynb

The key difference is that this one samples the CLIP-vit-large-patch14 tokens directly instead of using pre-written prompts. I text_encode the tokens individually and store them in a list for later use. I'm using the method shown in this paper, "NND" (nearest neighbor decoding).

Methods for making better CLIP interrogators: https://arxiv.org/pdf/2303.03032

T5 encoder paper : https://arxiv.org/pdf/1910.10683

Example from the notebook where I'm using the NND method on 49K CLIP tokens (Roman girl image) :

Most similar suffix tokens : "{vfx |cleanup |warcraft |defend |avatar |wall |blu |indigo |dfs |bluetooth |orian |alliance |defence |defenses |defense |guardians |descendants |navis |raid |avengersendgame }"

Most similar prefix tokens : "{imperi-|blue-|bluec-|war-|blau-|veer-|blu-|vau-|bloo-|taun-|kavan-|kair-|storm-|anarch-|purple-|honor-|spartan-|swar-|raun-|andor-}"

AdComfortable1514 1mo ago • 100%

New stuff

Paper: https://arxiv.org/abs/2303.03032

Takes only a few seconds to calculate.

Most similar suffix tokens : "{vfx |cleanup |warcraft |defend |avatar |wall |blu |indigo |dfs |bluetooth |orian |alliance |defence |defenses |defense |guardians |descendants |navis |raid |avengersendgame }"

Most similar prefix tokens : "{imperi-|blue-|bluec-|war-|blau-|veer-|blu-|vau-|bloo-|taun-|kavan-|kair-|storm-|anarch-|purple-|honor-|spartan-|swar-|raun-|andor-}"

AdComfortable1514 1mo ago • 100%

I count casualty_rate = number_shot / (number_shot + number_subdued)

Which in this case is 22/64 = 34% casualty rate for civilians

and 98/131 = 75% casualty rate for police
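
The same arithmetic as a quick script, in case anyone wants to check the rounding. The subdued counts (42 and 33) are not quoted anywhere. They are just the totals above minus the number shot.

```python
def casualty_rate(number_shot, number_subdued):
    """casualty_rate = number_shot / (number_shot + number_subdued)"""
    return number_shot / (number_shot + number_subdued)

civilians = casualty_rate(22, 64 - 22)   # 22 shot out of 64 incidents
police = casualty_rate(98, 131 - 98)     # 98 shot out of 131 incidents
print(f"civilians: {civilians:.1%}, police: {police:.1%}")
# civilians: 34.4%, police: 74.8%
```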

AdComfortable1514 1mo ago • 85%

So it's 64-131 between work done by bystanders vs. work done by police?

And casualty rate is actually lower for bystanders doing the work (with their guns) than the police?

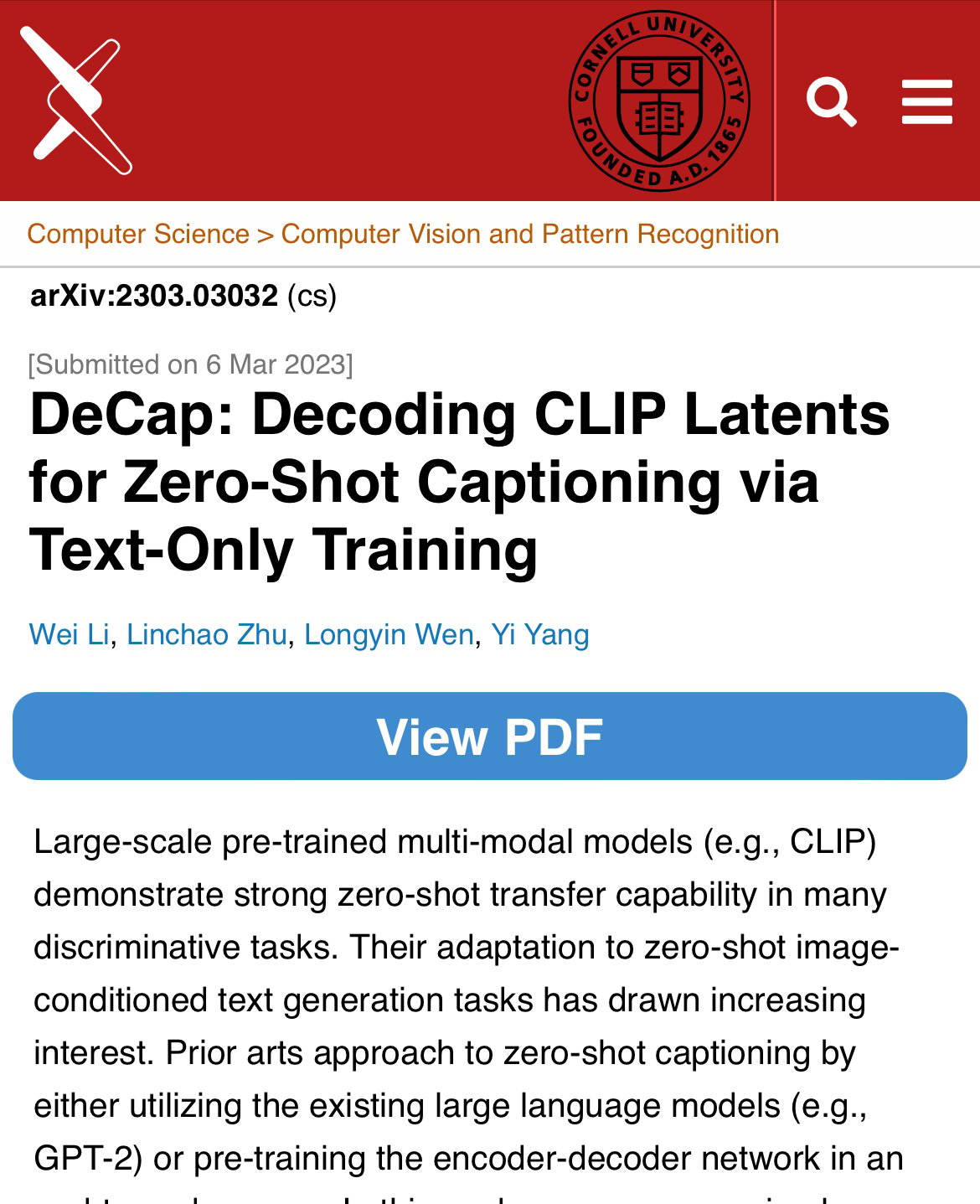

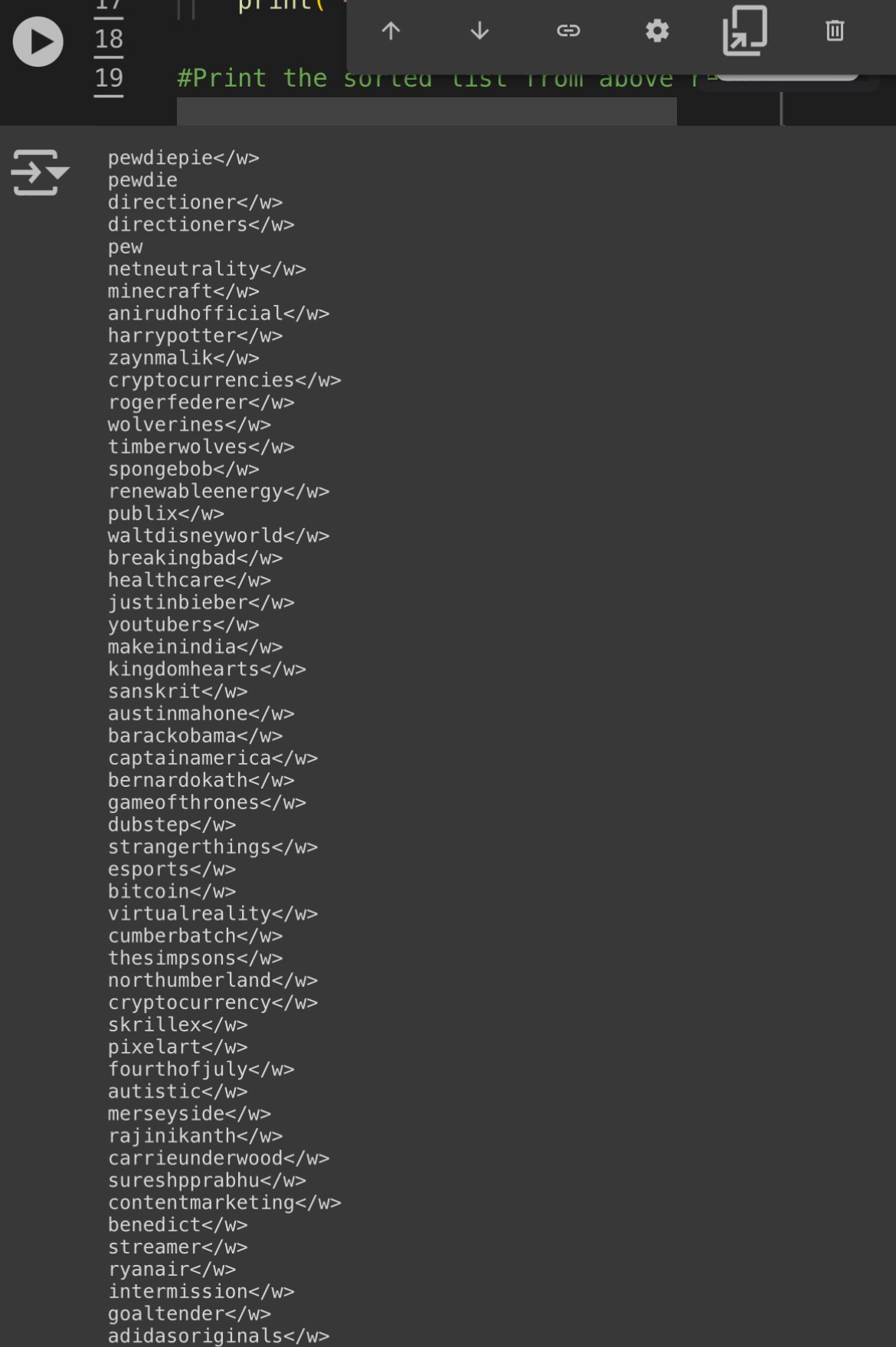

Created by me. Link : https://huggingface.co/codeShare/JupyterNotebooks/blob/main/sd_token_similarity_calculator.ipynb # How does this work? Similiar vectors = similiar output in the SD 1.5 / SDXL / FLUX model CLIP converts the prompt text to vectors (“tensors”) , with float32 values usually ranging from -1 to 1. Dimensions are \[ 1x768 ] tensors for SD 1.5 , and a \[ 1x768 , 1x1024 ] tensor for SDXL and FLUX. The SD models and FLUX converts these vectors to an image. This notebook takes an input string , tokenizes it and matches the first token against the 49407 token vectors in the vocab.json : [https://huggingface.co/black-forest-labs/FLUX.1-dev/tree/main/tokenizer](https://www.google.com/url?q=https%3A%2F%2Fhuggingface.co%2Fblack-forest-labs%2FFLUX.1-dev%2Ftree%2Fmain%2Ftokenizer) It finds the “most similiar tokens” in the list. Similarity is the theta angle between the token vectors.  The angle is calculated using cosine similarity , where 1 = 100% similarity (parallell vectors) , and 0 = 0% similarity (perpendicular vectors). Negative similarity is also possible. # How can I use it? If you are bored of prompting “girl” and want something similiar you can run this notebook and use the “chick” token at 21.88% similarity , for example You can also run a mixed search , like “cute+girl”/2 , where for example “kpop” has a 16.71% similarity There are some strange tokens further down the list you go. Example: tokens similiar to the token "pewdiepie</w>" (yes this is an actual token that exists in CLIP)  Each of these correspond to a unique 1x768 token vector. The higher the ID value , the less often the token appeared in the CLIP training data. To reiterate; this is the CLIP model training data , not the SD-model training data. So for certain models , tokens with high ID can give very consistent results , if the SD model is trained to handle them. Example of this can be anime models , where japanese artist names can affect the output greatly. 
Tokens with high ID will often give the "fun" output when used in very short prompts. # What about token vector length? If you are wondering about token magnitude, Prompt weights like (banana:1.2) will scale the magnitude of the corresponding 1x768 tensor(s) by 1.2 . So thats how prompt token magnitude works. Source: [https://huggingface.co/docs/diffusers/main/en/using-diffusers/weighted\_prompts](https://www.google.com/url?q=https%3A%2F%2Fhuggingface.co%2Fdocs%2Fdiffusers%2Fmain%2Fen%2Fusing-diffusers%2Fweighted_prompts)\* So TLDR; vector direction = “what to generate” , vector magnitude = “prompt weights” # How prompting works (technical summary) 1. There is no correct way to prompt. 2. Stable diffusion reads your prompt left to right, one token at a time, finding association _from_ the previous token _to_ the current token _and to_ the image generated thus far (Cross Attention Rule) 3. Stable Diffusion is an optimization problem that seeks to maximize similarity to prompt and minimize similarity to negatives (Optimization Rule) Reference material (covers entire SD , so not good source material really, but the info is there) : https://youtu.be/sFztPP9qPRc?si=ge2Ty7wnpPGmB0gi # The SD pipeline For every step (20 in total by default) for SD1.5 : 1. Prompt text => (tokenizer) 2. => Nx768 token vectors =>(CLIP model) => 3. 1x768 encoding => ( the SD model / Unet ) => 4. => _Desired_ image per Rule 3 => ( sampler) 5. => Paint a section of the image => (image) # Disclaimer /Trivia This notebook should be seen as a "dictionary search tool" for the vocab.json , which is the same for SD1.5 , SDXL and FLUX. Feel free to verify this by checking the 'tokenizer' folder under each model. 
vocab.json in the FLUX model , for example (1 of 2 copies) : https://huggingface.co/black-forest-labs/FLUX.1-dev/tree/main/tokenizer I'm using Clip-vit-large-patch14 , which is used in SD 1.5 , and is one among the two tokenizers for SDXL and FLUX : https://huggingface.co/openai/clip-vit-large-patch14/blob/main/README.md This set of tokens has dimension 1x768. SDXL and FLUX uses an additional set of tokens of dimension 1x1024. These are not included in this notebook. Feel free to include them yourselves (I would appreciate that). To do so, you will have to download a FLUX and/or SDXL model , and copy the 49407x1024 tensor list that is stored within the model and then save it as a .pt file. //---// I am aware it is actually the 1x768 text_encoding being processed into an image for the SD models + FLUX. As such , I've included text_encoding comparison at the bottom of the Notebook. I am also aware thar SDXL and FLUX uses additional encodings , which are not included in this notebook. * Clip-vit-bigG for SDXL: https://huggingface.co/laion/CLIP-ViT-bigG-14-laion2B-39B-b160k/blob/main/README.md * And the T5 text encoder for FLUX. I have 0% understanding of FLUX T5 text_encoder. //---// If you want them , feel free to include them yourself and share the results (cuz I probably won't) :)! That being said , being an encoding , I reckon the CLIP Nx768 => 1x768 should be "linear" (or whatever one might call it) So exchange a few tokens in the Nx768 for something similiar , and the resulting 1x768 ought to be kinda similar to 1x768 we had earlier. Hopefully. I feel its important to mention this , in case some wonder why the token-token similarity don't match the text-encoding to text-encoding similarity. # Note regarding CLIP text encoding vs. 
token *To make this disclaimer clear; Token-to-token similarity is not the same as text_encoding similarity.* I have to say this , since it will otherwise get (even more) confusing , as both the individual tokens , and the text_encoding have dimensions 1x768. They are separate things. Separate results. etc. As such , you will not get anything useful if you start comparing similarity between a token , and a text-encoding. So don't do that :)! # What about the CLIP image encoding? The CLIP model can also do an image_encoding of an image, where the output will be a 1x768 tensor. These _can_ be compared with the text_encoding. Comparing CLIP image_encoding with the CLIP text_encoding for a bunch of random prompts until you find the "highest similarity" , is a method used in the CLIP interrogator : https://huggingface.co/spaces/pharmapsychotic/CLIP-Interrogator List of random prompts for CLIP interrogator can be found here, for reference : https://github.com/pharmapsychotic/clip-interrogator/tree/main/clip_interrogator/data The CLIP image_encoding is not included in this Notebook. If you spot errors / ideas for improvememts; feel free to fix the code in your own notebook and post the results. I'd appreciate that over people saying "your math is wrong you n00b!" with no constructive feedback. //---// Regarding output # What are the </w> symbols? The whitespace symbol indicate if the tokenized item ends with whitespace ( the suffix "banana</w>" => "banana " ) or not (the prefix "post" in "post-apocalyptic ") For ease of reference , I call them prefix-tokens and suffix-tokens. Sidenote: Prefix tokens have the unique property in that they "mutate" suffix tokens Example: "photo of a #prefix#-banana" where #prefix# is a randomly selected prefix-token from the vocab.json The hyphen "-" exists to guarantee the tokenized text splits into the written #prefix# and #suffix# token respectively. The "-" hypen symbol can be replaced by any other special character of your choosing. 
Capital letters work too , e.g "photo of a #prefix#Abanana" since the capital letters A-Z are only listed once in the entire vocab.json. You can also choose to omit any separator and just rawdog it with the prompt "photo of a #prefix#banana" , however know that this may , on occasion , be tokenized as completely different tokens of lower ID:s. Curiously , common NSFW terms found online have in the CLIP model have been purposefully fragmented into separate #prefix# and #suffix# counterparts in the vocab.json. Likely for PR-reasons. You can verify the results using this online tokenizer: https://sd-tokenizer.rocker.boo/    # What is that gibberish tokens that show up? The gibberish tokens like "ðŁĺħ\</w>" are actually emojis! Try writing some emojis in this online tokenizer to see the results: https://sd-tokenizer.rocker.boo/ It is a bit borked as it can't process capital letters properly. Also note that this is not reversible. If tokenization "😅" => ðŁĺħ</w> Then you can't prompt "ðŁĺħ" and expect to get the same result as the tokenized original emoji , "😅". SD 1.5 models actually have training for Emojis. But you have to set CLIP skip to 1 for this to work is intended. For example, this is the result from "photo of a 🧔🏻♂️"  A tutorial on stuff you can do with the vocab.list concluded. Anyways, have fun with the notebook. There might be some updates in the future with features not mentioned here. //---//
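
On the "gibberish" emoji tokens from the post above: they come from how byte-level BPE tokenizers display raw UTF-8 bytes as printable characters. The sketch below rebuilds that byte table in plain Python, assuming CLIP uses the same table as GPT-2 style byte-level BPE. The fact that it reproduces the "ðŁĺħ" from the post suggests that it does. It only does the byte mapping. It does not run the BPE merges or add the `</w>` marker, and `as_vocab_text` is a made-up helper name.

```python
def bytes_to_unicode():
    """Byte -> printable character table used by byte-level BPE tokenizers."""
    # Bytes that are already printable keep their own character.
    keep = list(range(33, 127)) + list(range(161, 173)) + list(range(174, 256))
    table = {b: chr(b) for b in keep}
    offset = 0
    for b in range(256):
        if b not in table:
            # Remaining bytes are shifted to code points from 256 upward.
            table[b] = chr(256 + offset)
            offset += 1
    return table

def as_vocab_text(text):
    """Show how a string's UTF-8 bytes appear inside vocab.json entries."""
    table = bytes_to_unicode()
    return "".join(table[b] for b in text.encode("utf-8"))

print(as_vocab_text("😅"))  # ðŁĺħ
```

This also shows why it is not reversible from the prompt side: typing "ðŁĺħ" encodes those four characters as their own UTF-8 bytes, which are different bytes from the ones in the emoji.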

Coded it myself in Google Colab. Quite cool, if I say so myself. Link: https://huggingface.co/codeShare/JupyterNotebooks/blob/main/sd_token_similarity_calculator.ipynb

Will update this post with links of stuff I have from the "# cool-finds" on the fusion gen discord: https://discord.gg/exBKyyrbtG I use that space to yeet links that might be useful. I will try to organize the links here a few items at a time. //---// Cover image prompt "\[ #FTSA# : "These are real in long pleated skirt and bangs standing in ruined city Monegasque by ilya kushinova they are all parc. Pretty cute , huh? (leigh cartoon dari courtney-anime wrath art style :0.3) green crowded mountains and roots unique visual effect intricate futuristic hair behind ear hyper realistic5 angry evil : 0.1\] " //----// # Prompt syntax Perchance prompt syntax: https://perchance.org/prompt-guide A111 wiki : https://github.com/AUTOMATIC1111/stable-diffusion-webui/wiki/Features Prompt parser.py : https://github.com/AUTOMATIC1111/stable-diffusion-webui/blob/master/modules/prompt_parser.py # Image Interrogators Converts an image to a prompt Pharmapsychotic (most popular one) : https://huggingface.co/spaces/pharmapsychotic/CLIP-Interrogator Danbooru tags : https://huggingface.co/spaces/hysts/DeepDanbooru # How Stable Diffusion prompts works Just good, technical source material on the "prompt text" => image output works. https://huggingface.co/docs/diffusers/main/en/using-diffusers/weighted_prompts https://arxiv.org/abs/2406.02965 This video explains cross-attention : https://youtu.be/sFztPP9qPRc?si=jhoupp4rPfJshj8V Sampler guide: https://stablediffusionweb.com/blog/stable-diffusion-samplers:-a-comprehensive-guide # AI chat Audio SFX/ voice lines : https://www.sounds-resource.com/ https://youtube.com/@soundmefreelyyt?si=yjUPqUVJA7JmUXQC Lorebooks : https://www.chub.ai/ # Online Tokenizer https://sd-tokenizer.rocker.boo/ # The Civitai prompt set In a separate category because of how useful it is. The best/largest set of prompts for SD that can be found online , assuming you can find a way to filter out all the "garbage" prompts. Has a lot if NSFW items. 
https://huggingface.co/datasets/AdamCodd/Civitai-8m-prompts

The set is massive , so I advise using Google Colab to avoid filling up your entire hard drive with the .txt documents.

I've split a part of the set into more manageable 500MB chunks for text processing : https://huggingface.co/codeShare/JupyterNotebooks/tree/main

# Prompt Styles

People who have crammed different artists / styles into SD 1.5 and/or SDXL , checked what "sticks" , and written up the results in lists.

https://lightroom.adobe.com/shares/e02b386129f444a7ab420cb28798c6b6
https://cheatsheet.strea.ly/
https://github.com/proximasan/sdxl_artist_styles_studies
https://huggingface.co/spaces/terrariyum/SDXL-artists-browser
https://docs.google.com/spreadsheets/d/1_jgQ9SyvUaBNP1mHHEzZ6HhL_Es1KwBKQtnpnmWW82I/htmlview#gid=1637207356
https://weirdwonderfulai.art/resources/stable-diffusion-xl-sdxl-art-medium/
https://rikkar69.github.io/SDXL-artist-study/
https://medium.com/@soapsudtycoon/stable-diffusion-trending-on-art-station-and-other-myths-c09b09084e33
https://docs.google.com/spreadsheets/u/0/d/1SRqJ7F_6yHVSOeCi3U82aA448TqEGrUlRrLLZ51abLg/htmlview
https://stable-diffusion-art.com/illustrated-guide/
https://rentry.org/artists_sd-v1-4
https://aiartes.com/
https://stablediffusion.fr/artists
https://proximacentaurib.notion.site/e28a4f8d97724f14a784a538b8589e7d?v=42948fd8f45c4d47a0edfc4b78937474
https://sdxl.parrotzone.art/
https://www.shruggingface.com/blog/blending-artist-styles-together-with-stable-diffusion-and-lora

# 3 Rules of prompting

1. There is no correct way to prompt.
2. Stable Diffusion reads your prompt left to right , one token at a time , finding association _from_ the previous token _to_ the current token _and to_ the image generated thus far (Cross Attention Rule)
3. Stable Diffusion is an optimization problem that seeks to maximize similarity to the prompt and minimize similarity to the negatives (Optimization Rule)

# The SD pipeline

For every step (20 in total by default) :

1. Prompt text => (tokenizer)
2. => Nx768 token vectors => (CLIP model) =>
3. 1x768 encoding => (the SD model / Unet) =>
4. => _Desired_ image per Rule 3 => (sampler)
5. => Paint a section of the image => (image)

# Latent space properties

Weights for token A = assigns a magnitude value to be multiplied with the 1x768 token vector A. By default 1.

Direction of token A = The theta angle between tokens A and B is equivalent to the similarity between A and B. Calculated as the normalized dot product between A and B (cosine similarity).

# CLIP properties (used in SD 1.5 , SDXL and FLUX)

The vocab.json = a list of about 49K tokens (49,408 entries) of fixed value , which correspond to English words or fragments of English words.

ID of token A = the lower the ID , the more "fungible" A is in the prompt. The higher the ID , the more "niche" the training data for token A will be.

# Perchance sub-generators (text-to-image)

The following generators contain prompt items which you may use for your own T2I projects. These ones were recently updated to allow you to download their contents as a JSON file. I'm writing these here to keep track of which generators are updated vs. non-updated.
For the full list of available datasets , scroll through the code on the fusion gen : [https://perchance.org/fusion-ai-image-generator](https://perchance.org/fusion-ai-image-generator) //---// [https://perchance.org/fusion-t2i-prompt-features-1](https://perchance.org/fusion-t2i-prompt-features-1) [https://perchance.org/fusion-t2i-prompt-features-2](https://perchance.org/fusion-t2i-prompt-features-2) [https://perchance.org/fusion-t2i-prompt-features-3](https://perchance.org/fusion-t2i-prompt-features-3) [https://perchance.org/fusion-t2i-prompt-features-4](https://perchance.org/fusion-t2i-prompt-features-4) [https://perchance.org/fusion-t2i-prompt-features-5](https://perchance.org/fusion-t2i-prompt-features-5) [https://perchance.org/fusion-t2i-prompt-features-6](https://perchance.org/fusion-t2i-prompt-features-6) [https://perchance.org/fusion-t2i-prompt-features-7](https://perchance.org/fusion-t2i-prompt-features-7) [https://perchance.org/fusion-t2i-prompt-features-8](https://perchance.org/fusion-t2i-prompt-features-8) [https://perchance.org/fusion-t2i-prompt-features-9](https://perchance.org/fusion-t2i-prompt-features-9) [https://perchance.org/fusion-t2i-prompt-features-10](https://perchance.org/fusion-t2i-prompt-features-10) [https://perchance.org/fusion-t2i-prompt-features-11](https://perchance.org/fusion-t2i-prompt-features-11) [https://perchance.org/fusion-t2i-prompt-features-12](https://perchance.org/fusion-t2i-prompt-features-12) [https://perchance.org/fusion-t2i-prompt-features-13](https://perchance.org/fusion-t2i-prompt-features-13) [https://perchance.org/fusion-t2i-prompt-features-14](https://perchance.org/fusion-t2i-prompt-features-14) [https://perchance.org/fusion-t2i-prompt-features-15](https://perchance.org/fusion-t2i-prompt-features-15) [https://perchance.org/fusion-t2i-prompt-features-16](https://perchance.org/fusion-t2i-prompt-features-16) [https://perchance.org/fusion-t2i-prompt-features-17](https://perchance.org/fusion-t2i-prompt-features-17) 
[https://perchance.org/fusion-t2i-prompt-features-18](https://perchance.org/fusion-t2i-prompt-features-18) [https://perchance.org/fusion-t2i-prompt-features-19](https://perchance.org/fusion-t2i-prompt-features-19) [https://perchance.org/fusion-t2i-prompt-features-20](https://perchance.org/fusion-t2i-prompt-features-20) (copy of fusion-t2i-prompt-features-1) [https://perchance.org/fusion-t2i-prompt-features-21](https://perchance.org/fusion-t2i-prompt-features-21) [https://perchance.org/fusion-t2i-prompt-features-22](https://perchance.org/fusion-t2i-prompt-features-22) [https://perchance.org/fusion-t2i-prompt-features-23](https://perchance.org/fusion-t2i-prompt-features-23) [https://perchance.org/fusion-t2i-prompt-features-24](https://perchance.org/fusion-t2i-prompt-features-24) [https://perchance.org/fusion-t2i-prompt-features-25](https://perchance.org/fusion-t2i-prompt-features-25) [https://perchance.org/fusion-t2i-prompt-features-26](https://perchance.org/fusion-t2i-prompt-features-26) [https://perchance.org/fusion-t2i-prompt-features-27](https://perchance.org/fusion-t2i-prompt-features-27) [https://perchance.org/fusion-t2i-prompt-features-28](https://perchance.org/fusion-t2i-prompt-features-28) [https://perchance.org/fusion-t2i-prompt-features-29](https://perchance.org/fusion-t2i-prompt-features-29) [https://perchance.org/fusion-t2i-prompt-features-30](https://perchance.org/fusion-t2i-prompt-features-30) [https://perchance.org/fusion-t2i-prompt-features-31](https://perchance.org/fusion-t2i-prompt-features-31) [https://perchance.org/fusion-t2i-prompt-features-32](https://perchance.org/fusion-t2i-prompt-features-32) [https://perchance.org/fusion-t2i-prompt-features-33](https://perchance.org/fusion-t2i-prompt-features-33) [https://perchance.org/fusion-t2i-prompt-features-34](https://perchance.org/fusion-t2i-prompt-features-34) //----//
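
To make the "Latent space properties" notes above a bit more concrete , here is a minimal sketch of the normalized dot product (cosine similarity) in plain Python. The two vectors are made-up 4-dimensional stand-ins for real 1x768 token vectors , and `apply_weight` is a hypothetical helper name , not something taken from SD or CLIP. It only illustrates that a weight changes the magnitude of a vector and not its direction.

```python
import math


def cosine_similarity(a, b):
    """Normalized dot product between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def apply_weight(vector, weight=1.0):
    """Hypothetical helper: scale a token vector by a prompt weight (default 1)."""
    return [weight * x for x in vector]


# Made-up toy vectors standing in for 1x768 token vectors (assumption).
token_a = [0.2, -0.5, 0.1, 0.7]
token_b = [0.1, -0.4, 0.3, 0.6]

sim = cosine_similarity(token_a, token_b)

# Weighting token A by 1.3 changes its length , not the angle to token B.
sim_weighted = cosine_similarity(apply_weight(token_a, 1.3), token_b)

# The theta angle between A and B , in degrees (clamped against rounding error).
theta_deg = math.degrees(math.acos(max(-1.0, min(1.0, sim))))

print(sim, sim_weighted, theta_deg)
```

With real data you would swap the toy lists for the 768 values of each token vector. The similarity score stays the same whatever weight you apply , which is why weight and direction are listed as separate properties.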

Bloomberg interview: https://youtu.be/tZCAvME-a98?si=OTcjxhE8iJfdo_lU

This is San Francisco , California , "the hub" of all the major tech giants.

SB 1047 ('restrict commercial use of harmful AI models in California') is a partisan bill (Democrat 4-0) proposed by San Francisco senator Scott Wiener : https://legiscan.com/CA/text/SB1047/2023

Source: https://legiscan.com/CA/text/AB3211/id/2984195

# Clarifications

(l) “Provenance data” means data that identifies the origins of synthetic content, including, but not limited to, the following:

(1) The name of the generative AI provider.
(2) The name and version number of the AI system that generated the content.
(3) The time and date of the creation.
(4) The portions of content that are synthetic.

(m) “Synthetic content” means information, including images, videos, audio, and text, that has been produced or significantly modified by a generative AI system.

(n) “Watermark” means information that is embedded into a generative AI system’s output for the purpose of conveying its synthetic nature, identity, provenance, history of modifications, or history of conveyance.

(o) “Watermark decoders” means freely available software tools or online services that can read or interpret watermarks and output the provenance data embedded in them.

# AI Generative services obligations

(a) A generative AI provider shall do all of the following:

(1) Place imperceptible and maximally indelible watermarks containing provenance data into synthetic content produced or significantly modified by a generative AI system that the provider makes available.

(A) If a sample of synthetic content is too small to contain the required provenance data, the provider shall, at minimum, attempt to embed watermarking information that identifies the content as synthetic and provide the following provenance information in order of priority, with clause (i) being the most important, and clause (iv) being the least important:

(i) The name of the generative AI provider.
(ii) The name and version number of the AI system that generated the content.
(iii) The time and date of the creation of the content.
(iv) If applicable, the specific portions of the content that are synthetic.
# Use of watermarks

(B) To the greatest extent possible, watermarks shall be designed to retain information that identifies content as synthetic and gives the name of the provider in the event that a sample of synthetic content is corrupted, downscaled, cropped, or otherwise damaged.

(2) Develop downloadable watermark decoders that allow a user to determine whether a piece of content was created with the provider’s system, and make those tools available to the public.

(A) The watermark decoders shall be easy to use by individuals seeking to quickly assess the provenance of a single piece of content.

(B) The watermark decoders shall adhere, to the greatest extent possible, to relevant national or international standards.

(3) Conduct AI red-teaming exercises involving third-party experts to test whether watermarks can be easily removed from synthetic content produced by the provider’s generative AI systems, as well as whether the provider’s generative AI systems can be used to falsely add watermarks to otherwise authentic content. Red-teaming exercises shall be conducted before the release of any new generative AI system and annually thereafter.

(b) A generative AI provider may continue to make available a generative AI system that was made available before the date upon which this act takes effect and that does not have watermarking capabilities as described by paragraph (1) of subdivision (a), if either of the following conditions are met:

(1) The provider is able to retroactively create and make publicly available a decoder that accurately determines whether a given piece of content was produced by the provider’s system with at least 99 percent accuracy as measured by an independent auditor.

(c) Providers and distributors of software and online services shall not make available a system, application, tool, or service that is designed to remove watermarks from synthetic content.
(d) Generative AI hosting platforms shall not make available a generative AI system that does not place maximally indelible watermarks containing provenance data into content created by the system.

# AI Text Chat LLMs

(f) (1) A conversational AI system shall clearly and prominently disclose to users that the conversational AI system generates synthetic content.

(A) In visual interfaces, including, but not limited to, text chats or video calling, a conversational AI system shall place the disclosure required under this subdivision in the interface itself and maintain the disclosure’s visibility in a prominent location throughout any interaction with the interface.

(B) In audio-only interfaces, including, but not limited to, phone or other voice calling systems, a conversational AI system shall verbally make the disclosure required under this subdivision at the beginning and end of a call.

(2) In all conversational interfaces of a conversational AI system, the conversational AI system shall, at the beginning of a user’s interaction with the system, obtain a user’s affirmative consent acknowledging that the user has been informed that they are interacting with a conversational AI system. A conversational AI system shall obtain a user’s affirmative consent prior to beginning the conversation.

(4) The requirements under this subdivision shall not apply to conversational AI systems that do not produce inauthentic content.

# 'Add Authenticity watermark to all cameras'

(a) For purposes of this section, the following definitions apply:

(1) “Authenticity watermark” means a watermark of authentic content that includes the name of the device manufacturer.

(2) “Camera and recording device manufacturer” means the makers of a device that can record photographic, audio, or video content, including, but not limited to, video and still photography cameras, mobile phones with built-in cameras or microphones, and voice recorders.
(3) “Provenance watermark” means a watermark of authentic content that includes details about the content, including, but not limited to, the time and date of production, the name of the user, details about the device, and a digital signature.

(b) (1) Beginning January 1, 2026, newly manufactured digital cameras and recording devices sold, offered for sale, or distributed in California shall offer users the option to place an authenticity watermark and provenance watermark in the content produced by that device.

(2) A user shall have the option to remove the authenticity and provenance watermarks from the content produced by their device.

(3) Authenticity watermarks shall be turned on by default, while provenance watermarks shall be turned off by default.

# How to demonstrate use

Beginning March 1, 2025, a large online platform shall use labels to prominently disclose the provenance data found in watermarks or digital signatures in content distributed to users on its platforms.

(1) The labels shall indicate whether content is fully synthetic, partially synthetic, authentic, authentic with minor modifications, or does not contain a watermark.

(2) A user shall be able to click or tap on a label to inspect provenance data in an easy-to-understand format.

(b) The disclosure required under subdivision (a) shall be readily legible to an average viewer or, if the content is in audio format, shall be clearly audible. A disclosure in audio content shall occur at the beginning and end of a piece of content and shall be presented in a prominent manner and at a comparable volume and speaking cadence as other spoken words in the content. A disclosure in video content should be legible for the full duration of the video.

(c) A large online platform shall use state-of-the-art techniques to detect and label synthetic content that has had watermarks removed or that was produced by generative AI systems without watermarking functionality.
(d) (1) A large online platform shall require a user that uploads or distributes content on its platform to disclose whether the content is synthetic content.

(2) A large online platform shall include prominent warnings to users that uploading or distributing synthetic content without disclosing that it is synthetic content may result in disciplinary action.

(e) A large online platform shall use state-of-the-art techniques to detect and label text-based inauthentic content that is uploaded by users.

(f) A large online platform shall make accessible a verification process for users to apply a digital signature to authentic content. The verification process shall include options that do not require disclosure of personal identifiable information.

# 'AI services must report their efforts against harmful content'

(a) (1) Beginning January 1, 2026, and annually thereafter, generative AI providers and large online platforms shall produce a Risk Assessment and Mitigation Report that assesses the risks posed and harms caused by synthetic content generated by their systems or hosted on their platforms.

(2) The report shall include, but not be limited to, assessments of the distribution of AI-generated child sexual abuse materials, nonconsensual intimate imagery, disinformation related to elections or public health, plagiarism, or other instances where synthetic or inauthentic content caused or may have the potential to cause harm.

# Penalty for violating this bill

A violation of this chapter may result in an administrative penalty, assessed by the Department of Technology, of up to one million dollars ($1,000,000) or 5 percent of the violator’s annual global revenue, whichever is higher.

The old version was trash , so I made an update where you can just paste in a YouTube playlist link to get all the videos as MP3s: https://huggingface.co/codeShare/JupyterNotebooks/blob/main/YT-playlist-to-mp3.ipynb

Very useful tool for me , so I'm posting it here to share.

(Non-existent) link : https://huggingface.co/runwayml/stable-diffusion-v1-5

There is no longer an official version of SD 1.5! RunwayML has basically disavowed any involvement with the original Stable Diffusion model.

If I had to guess at reasons , I'd say it's a proactive measure against potential future litigation. Might even be connected to recent developments in California: https://lemmy.world/post/19258795

Pure speculation of course. I really have no idea. Still , it's a pretty shocking decision given the popularity of the SD 1.5 model.

Bill SB 1047 ('restrict commercial use of harmful AI models in California') : https://leginfo.legislature.ca.gov/faces/billTextClient.xhtml?bill_id=202320240SB1047

Bill AB 3211 ('AI generation services must include tamper-proof watermarks on all AI generated content') : https://leginfo.legislature.ca.gov/faces/billTextClient.xhtml?bill_id=202320240AB3211

Image is Senator Scott Wiener (Democrat) , who put forward SB 1047.

//----//

SB 1047 is written like the California senators think AI models are Skynet or something.

Quotes from SB 1047:

"This bill .... requires that a developer, before beginning to initially train a covered model, as defined, comply with various requirements, including implementing the capability to promptly enact a full shutdown, as defined, and implement a written and separate safety and security protocol, as specified."

"c) If not properly subject to human controls, future development in artificial intelligence may also have the potential to be used to create novel threats to public safety and security, including by enabling the creation and the proliferation of weapons of mass destruction, such as biological, chemical, and nuclear weapons, as well as weapons with cyber-offensive capabilities."

Additionally , SB 1047 is written so that California senators can dictate which AI models are "ok to train" and which "are not ok to train". Or put more plainly: "AI models that adhere to California politics" vs. "Everything else". Legislative "Woke bias" for AI-models , essentially.

//----//

AB 3211 has a more grounded approach , focusing on how AI generated content can potentially be used for online disinformation. The AB 3211 bill says that all AI generation services must have watermarks that can identify the content as being produced by an AI-tool. I don't know of any examples of AI being used for political disinformation yet (have you?).
Though seeing the latest Flux model , I realize it is becoming really hard to tell the difference between an AI generated image and a real stock photo.

AB 3211 is frustratingly vague with regards to proving an image is AI generated vs. respecting user privacy.

Quotes from AB 3211:

"This bill, the California Digital Content Provenance Standards, would require a generative artificial intelligence (AI) provider, as provided, to, among other things, apply provenance data to synthetic content produced or significantly modified by a generative AI system that the provider makes available, as those terms are defined, and to conduct adversarial testing exercises, as prescribed."

The bill does not specify what method(s) should be used to provide 'provenance'. But practically speaking , for present-day AI image generation sites this means either adding extra text to the metadata , or subtly encoding text into the image by modifying the RGB values of the pixels in the image itself. The latter is known as an "invisible watermark": https://www.locklizard.com/document-security-blog/invisible-watermarks/

AB 3211 explicitly states that the 'provenance' must be tamper proof. This is strange , since any aspect of a .png or .mp3 file can be modified at will. It seems the legislators behind this bill have no clue what they are talking about here.

Depending on how one wishes to interpret AB 3211 , this encoding can include other bits of information as well , such as the IP address of the user , the prompt used for the image and the exact date the image was generated. Any watermarking added to a public image that includes private user data will be in violation of EU-law.

That being said , expect a new "Terms of Service" on AI generation sites in response to this new bill. Read the terms carefully. There might be a watermark update on generated images , or there might not.
Watermarks on RGB values of generated images can easily be removed by resizing the image in MS Paint , or by taking a screenshot of the image.

Articles:

SB1047: https://techcrunch.com/2024/08/30/california-ai-bill-sb-1047-aims-to-prevent-ai-disasters-but-silicon-valley-warns-it-will-cause-one/

AB3211: https://www.thedrum.com/news/2024/08/28/how-might-advertisers-be-impacted-california-s-new-ai-watermarking-bill
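
As a rough illustration of the "modify the RGB values" idea and why resizing can wipe it out , here is a toy sketch in plain Python. It assumes the simplest possible scheme (one watermark bit hidden in the least significant bit of each channel value) and fakes a resize by averaging neighbouring values. The function names and the numbers are made up for the example. I have no idea what scheme any actual site uses , and more robust watermarks are designed to survive this kind of thing.

```python
def embed_bits(pixels, bits):
    """Hide one watermark bit in the least significant bit of each channel value."""
    if len(bits) > len(pixels):
        raise ValueError("not enough pixel values to hold the watermark")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the lowest bit , then set it
    return marked


def extract_bits(pixels, count):
    """Read back the lowest bit of the first `count` channel values."""
    return [p & 1 for p in pixels[:count]]


def downscale_then_upscale(pixels):
    """Crude stand-in for a resize: average each pair of values , then duplicate.

    Assumes an even number of values; a trailing odd value would be dropped.
    """
    out = []
    for i in range(0, len(pixels) - 1, 2):
        avg = (pixels[i] + pixels[i + 1]) // 2
        out.extend([avg, avg])
    return out


# Made-up 8-bit channel values and watermark bits (assumption).
original = [200, 13, 54, 97, 180, 33, 76, 121]
watermark = [1, 0, 1, 1, 0, 0, 1, 0]

marked = embed_bits(original, watermark)
resized = downscale_then_upscale(marked)

# Each value moves by at most 1 , so the mark is invisible to the eye.
max_change = max(abs(a - b) for a, b in zip(original, marked))

print(extract_bits(marked, len(watermark)) == watermark)   # intact before the resize
print(extract_bits(resized, len(watermark)) == watermark)  # scrambled after the resize
```

The averaging step mixes neighbouring values , so the lowest bits no longer carry the original pattern. Real resampling filters differ in the details , but they have the same kind of effect on a naive least-significant-bit mark.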

Just adding a quick line of code that allows you to write something like (model:::1) , (model:::2) and (model:::3) to override the keywords would be so helpful. Things work really well overall , but it's frustrating to , for example , run an anime prompt only to have it switch over to the furry SD model because perchance detects the word "dragon" in the prompt etc.

_For context: Perchance uses 3 SD models that run depending on keywords in the prompt. I want to be able to override this feature._
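
Something along these lines is what I have in mind , sketched in Python just to show the parsing. This is purely hypothetical: `split_model_override` is a made-up name , the (model:::N) tag is only my suggested syntax , and I don't know how Perchance actually picks the model internally or which number would map to which model.

```python
import re

# Suggested tag syntax from the request above: (model:::1) , (model:::2) , (model:::3).
MODEL_TAG = re.compile(r"\(\s*model\s*:::\s*([123])\s*\)")


def split_model_override(prompt):
    """Return (model number or None , prompt with the override tag removed)."""
    match = MODEL_TAG.search(prompt)
    model = int(match.group(1)) if match else None
    cleaned = MODEL_TAG.sub("", prompt)
    # Tidy up the whitespace left behind by the removed tag.
    cleaned = re.sub(r"\s{2,}", " ", cleaned).strip()
    return model, cleaned


model, cleaned = split_model_override("(model:::2) anime girl riding a dragon")
print(model, cleaned)
```

If the tag is present , the generator would use that model and skip the keyword detection. If it is missing , `model` comes back as `None` and the existing keyword logic can run as usual.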

It's very useful. I made this guide to post in the tutorials.

Find Danbooru tags here: https://danbooru.donmai.us/related_tag (If you know of a better/alternate resource for searching/finding anime tags , please post below)

Fusion gen: https://perchance.org/fusion-ai-image-generator

Fusion discord: https://discord.gg/8TVHPf6Edn

//---//

Perchance uses a different text-to-image model based on user input in the prompt. The "prompt using tags" approach is a quirk that works for the anime model , and likely the furry model too if using e621 tags. But "prompt using tags" will not work as well for the photoreal model. Just something to keep in mind.

I recommend setting the base prompt to #COMIC# to guarantee that the "anime perchance model" is used.

(EDIT: Also , I realize I posted stuff into the "Inspect Wildcard" field for the Legend of Zelda tags. Eh..mistakes happen. )